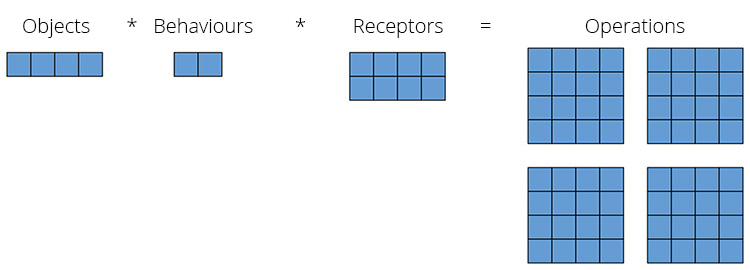

On this page, we give you deeper insight into the performance of our system. Let's get right to it: the overall computing power needed for one agent depends on the number of perceived objects (m), the number of its behaviours (b), and the receptor count of the agent's sensor (r). The resulting worst-case complexity for one agent is therefore O(m · b · r). Figure 1 illustrates what this means for the number of operations our system performs to make a proper (movement) decision.

Figure 1: A visual illustration of the worst-case complexity for 4 objects which are perceived by an agent having 2 different behaviours and a sensor with 8 receptors.
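Plugging the numbers from Figure 1 into the worst-case bound gives a rough operation count. This sketch counts only one dot product per percept, behaviour and receptor, and ignores the cost of the behaviours' own direction calculations:

```latex
\text{dot products} = m \cdot b \cdot r = 4 \cdot 2 \cdot 8 = 64
```

Doubling any single factor, e.g. going from 8 to 16 receptors, doubles this count to 128.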

The most obvious performance factor is the sensor resolution. The agent has to do at least one dot product per receptor for each behaviour and for each percept.
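The nested loops behind this cost can be sketched as follows. This is a minimal, language-neutral illustration in Python, not the package's implementation: the names `receptor_directions`, `evaluate`, `seek` and `flee` are hypothetical, receptors are assumed to be unit direction vectors on a ring, and keeping the maximum value per receptor is an assumption about how results are combined.

```python
import math

def normalize(v):
    length = math.hypot(v[0], v[1])
    return (v[0] / length, v[1] / length) if length > 0 else (0.0, 0.0)

def receptor_directions(r):
    """Hypothetical ring-shaped sensor: r unit vectors evenly spaced on a circle."""
    return [(math.cos(2 * math.pi * i / r), math.sin(2 * math.pi * i / r)) for i in range(r)]

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1]

def evaluate(percepts, behaviours, receptors):
    """Score every receptor for every (percept, behaviour) pair.

    Returns the per-receptor values and the number of dot products performed.
    """
    values = [0.0] * len(receptors)
    dot_products = 0
    for percept in percepts:                      # m
        for behaviour in behaviours:              # b
            direction = behaviour(percept)        # behaviour-specific cost
            for i, receptor in enumerate(receptors):  # r
                # Assumption: keep the highest value written to each receptor.
                values[i] = max(values[i], dot(direction, receptor))
                dot_products += 1
    return values, dot_products

# Hypothetical behaviours: percepts are positions relative to the agent.
seek = lambda p: normalize(p)
flee = lambda p: normalize((-p[0], -p[1]))

percepts = [(1.0, 0.0), (0.0, 2.0), (-3.0, 1.0), (2.0, -2.0)]
values, count = evaluate(percepts, [seek, flee], receptor_directions(8))
print(count)  # 64
```

With the numbers from Figure 1 (4 percepts, 2 behaviours, 8 receptors), the innermost line runs 4 · 2 · 8 = 64 times.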

Hence, we advise setting the receptor count as low as is feasible for your game (or simulation). Since the sensors are scriptable objects, they are shared between all agents referencing them. This makes it possible to swap the sensor of an entire agent group at runtime. For instance, agents that are far away from the player do not need very precise calculations, so they can use a sensor with a lower receptor count. Agents near the player, on the other hand, typically need to behave more reliably and might therefore need a higher receptor count.
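A distance-based sensor swap could look roughly like this. It is a Python sketch of the idea, not the package's API: `Sensor`, `Agent`, `assign_sensors`, `far_distance` and the receptor counts 16 and 4 are all hypothetical. The two module-level sensor instances stand in for shared scriptable-object assets that many agents reference.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Sensor:
    """Hypothetical stand-in for a shared sensor asset; only the receptor count matters here."""
    name: str
    receptor_count: int

# Two shared instances, referenced by many agents (like one asset used by a whole group).
NEAR_SENSOR = Sensor("near", 16)  # hypothetical: precise sensor for agents close to the player
FAR_SENSOR = Sensor("far", 4)     # hypothetical: cheap sensor for distant agents

@dataclass
class Agent:
    position: tuple
    sensor: Sensor = NEAR_SENSOR

def assign_sensors(agents, player_position, far_distance):
    """Give distant agents the cheap sensor and nearby agents the precise one."""
    for agent in agents:
        distance = math.dist(agent.position, player_position)
        agent.sensor = FAR_SENSOR if distance > far_distance else NEAR_SENSOR

agents = [Agent((1.0, 0.0)), Agent((50.0, 0.0)), Agent((0.0, 30.0))]
assign_sensors(agents, player_position=(0.0, 0.0), far_distance=20.0)
print([a.sensor.receptor_count for a in agents])  # [16, 4, 4]
```

Because the agents only hold references, swapping a group's sensor does not copy any receptor data; in practice you would run such a reassignment at a low frequency rather than every frame.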

The cost of each behaviour depends on its complexity. For example, a simple Seek behaviour just calculates a direction by subtracting two vectors. In contrast, Planar Avoid needs more operations and also has to write two resulting directions, which makes it more expensive.
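To show how little work a cheap behaviour does, here is a minimal Seek sketch in Python. The function name and the normalization step are assumptions for illustration; the package's Seek may differ. Planar Avoid is deliberately not sketched, since its algorithm is not described here.

```python
import math

def seek(agent_position, target_position):
    """Desired direction towards the target: one vector subtraction plus a normalization."""
    dx = target_position[0] - agent_position[0]
    dy = target_position[1] - agent_position[1]
    length = math.hypot(dx, dy)
    if length == 0.0:
        return (0.0, 0.0)  # already at the target, so there is no preferred direction
    return (dx / length, dy / length)

print(seek((0.0, 0.0), (3.0, 4.0)))  # (0.6, 0.8)
```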

Another important factor is the number of behaviours that are simultaneously active for an agent. If a behaviour component is disabled in the inspector, it is not updated at all. That is why it is perfectly fine to attach a large number of behaviours and select an active set for different situations, e.g., via a finite-state machine.
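A finite-state machine that selects the active set might be sketched as follows. This Python version only models the enable/disable logic: the states, the `ACTIVE_BEHAVIOURS` table and the behaviour names "Wander", "Flee" and "Separation" are hypothetical, and in the actual engine you would toggle the components' enabled state instead of a plain flag.

```python
from enum import Enum, auto

class State(Enum):
    WANDER = auto()
    CHASE = auto()
    FLEE = auto()

# Hypothetical mapping from state to the behaviours that should be enabled.
ACTIVE_BEHAVIOURS = {
    State.WANDER: {"Wander", "Separation"},
    State.CHASE: {"Seek", "Separation", "PlanarAvoid"},
    State.FLEE: {"Flee", "PlanarAvoid"},
}

class Behaviour:
    def __init__(self, name):
        self.name = name
        self.enabled = True  # a disabled behaviour is skipped entirely

class Agent:
    def __init__(self, behaviours):
        self.behaviours = behaviours  # all behaviours stay attached

    def enter_state(self, state):
        """Enable only the behaviours of the new state; the others stay attached but idle."""
        active = ACTIVE_BEHAVIOURS[state]
        for behaviour in self.behaviours:
            behaviour.enabled = behaviour.name in active

    def enabled_behaviours(self):
        """Only these would contribute to the per-update cost (the b in m · b · r)."""
        return [b.name for b in self.behaviours if b.enabled]

names = ["Wander", "Seek", "Flee", "Separation", "PlanarAvoid"]
agent = Agent([Behaviour(n) for n in names])
agent.enter_state(State.CHASE)
print(agent.enabled_behaviours())  # ['Seek', 'Separation', 'PlanarAvoid']
```

Five behaviours are attached, but in the CHASE state only three of them cost anything per update.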

Most behaviours have a property called manual-aim-behaviour-steering-foreachreceptor. If it is enabled, a behaviour's calculations are generally more expensive, and the actual cost depends on the receptor count. Therefore, only enable this property if the receptors' positions matter for your agents. This is most likely the case if the sensor's receptors do not all share the same position, e.g., for line-shaped sensors.
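The following Python sketch illustrates why such a per-receptor option scales with the receptor count. It assumes, as an illustration only, that the option makes a behaviour compute its direction from each receptor's own position instead of once from the agent's position. `seek_directions`, `receptor_offsets` and the `for_each_receptor` flag are hypothetical stand-ins, not the package's API.

```python
import math

def normalize(v):
    length = math.hypot(v[0], v[1])
    return (v[0] / length, v[1] / length) if length > 0 else (0.0, 0.0)

def seek_directions(agent_position, receptor_offsets, target, for_each_receptor):
    """Return one desired direction per receptor.

    Without the option, a single direction is computed from the agent position and
    reused for every receptor (1 calculation). With it, each receptor computes its
    own direction from agent position + offset (r calculations).
    """
    if not for_each_receptor:
        shared = normalize((target[0] - agent_position[0], target[1] - agent_position[1]))
        return [shared] * len(receptor_offsets)
    directions = []
    for ox, oy in receptor_offsets:
        rx, ry = agent_position[0] + ox, agent_position[1] + oy
        directions.append(normalize((target[0] - rx, target[1] - ry)))
    return directions

# Hypothetical line-shaped sensor: three receptors spread along the x axis.
line_offsets = [(-1.0, 0.0), (0.0, 0.0), (1.0, 0.0)]
print(seek_directions((0.0, 0.0), line_offsets, (0.0, 1.0), for_each_receptor=False))
print(seek_directions((0.0, 0.0), line_offsets, (0.0, 1.0), for_each_receptor=True))
```

For the line-shaped sensor, the outer receptors see the target at different angles, so the option changes the result. If all offsets were `(0.0, 0.0)`, both modes would return identical directions and the extra work would be wasted.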

Perception, in other words "how many objects every agent perceives", is another important aspect of system performance. As already mentioned, the number of perceived objects is a significant scaling factor for the behaviour calculations as well as for the per-receptor work. Another important aspect we want to point out here, however, is the data extraction mechanism for percepts.

In general, we advise restricting the number of objects an agent perceives as much as possible. The most convenient way is to adapt the radii of the radius steering behaviours; these behaviours then act as a simple filter. Every object that is too far away or too close is ignored, which can save a lot of operations inside the behaviours.
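The filtering effect of the radii can be sketched as a simple distance band. This is a Python illustration under the assumption that objects are reduced to positions; `radius_filter`, `min_radius` and `max_radius` are hypothetical names, not the package's properties.

```python
import math

def radius_filter(agent_position, objects, min_radius, max_radius):
    """Keep only objects whose distance lies within [min_radius, max_radius].

    Dropped objects add no per-behaviour or per-receptor work afterwards.
    """
    kept = []
    for obj in objects:
        distance = math.dist(agent_position, obj)
        if min_radius <= distance <= max_radius:
            kept.append(obj)
    return kept

objects = [(0.5, 0.0), (3.0, 0.0), (0.0, 8.0), (40.0, 0.0)]
print(radius_filter((0.0, 0.0), objects, min_radius=1.0, max_radius=10.0))
# [(3.0, 0.0), (0.0, 8.0)]
```

Here m drops from 4 to 2, which halves the m · b · r work for any number of behaviours and receptors.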

Environment components are another tool for organizing agent perception. With these components, you can split larger scenes into multiple environments so that an agent generally perceives only relevant objects. The advantage of fragmenting the scene is that no unnecessary percepts are created, which pays off especially when objects are static. If you rely only on the RadiusSteeringBehaviour, the percept data of every object is still unpacked. It is important to understand that filtering objects before they are unpacked is much better, because the data extraction itself also costs computational power.
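The difference between filtering after and before unpacking can be made visible by counting extraction calls. In this Python sketch, `SceneObject`, its `environment` tag, `Perception`, `unpack` and the environment names "cave" and "forest" are all hypothetical; `unpack` merely stands in for the real percept data extraction.

```python
import math
from dataclasses import dataclass

@dataclass
class SceneObject:
    position: tuple
    environment: str  # hypothetical tag: which environment the object belongs to

class Perception:
    def __init__(self):
        self.unpack_calls = 0  # how often percept data was extracted

    def unpack(self, obj):
        """Stand-in for the percept data extraction, which is not free."""
        self.unpack_calls += 1
        return {"position": obj.position}

    def radius_only(self, agent_pos, objects, max_radius):
        """Unpack every object, then filter by distance (radius behaviour only)."""
        percepts = [self.unpack(o) for o in objects]
        return [p for p in percepts if math.dist(agent_pos, p["position"]) <= max_radius]

    def environment_first(self, agent_pos, agent_env, objects, max_radius):
        """Skip objects from other environments before unpacking, then filter by distance."""
        percepts = [self.unpack(o) for o in objects if o.environment == agent_env]
        return [p for p in percepts if math.dist(agent_pos, p["position"]) <= max_radius]

objects = [SceneObject((float(i), 0.0), "cave" if i < 10 else "forest") for i in range(100)]
a, b = Perception(), Perception()
near_a = a.radius_only((0.0, 0.0), objects, max_radius=5.0)
near_b = b.environment_first((0.0, 0.0), "cave", objects, max_radius=5.0)
print(len(near_a) == len(near_b), a.unpack_calls, b.unpack_calls)  # True 100 10
```

Both approaches end up with the same six percepts, but the environment-first variant extracts data for only 10 of the 100 objects.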