An important question when working with our system is how to get data from the scene to the AI agents and their behaviours. Behaviours that should be thread-safe cannot access game object references directly, because Unity's classes are not thread-safe; only structs are. It is therefore necessary to extract the data from Unity's objects into a so-called percept. Currently, only one percept type is used together with steering behaviours: the SteeringPercept.
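
The idea of copying scene data into a percept can be sketched in a language-neutral way. The following Python snippet is only an illustration under stated assumptions: `SceneObject`, `Percept`, `extract_percept` and `distance_to_origin` are hypothetical names, not part of our API, and the real system is built on Unity. It shows a mutable scene object being copied into an immutable snapshot on the main thread, which a worker thread can then read safely.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


class SceneObject:
    """Hypothetical stand-in for a mutable, non-thread-safe engine object."""

    def __init__(self, name, position):
        self.name = name
        self.position = position  # mutable list [x, y, z]


@dataclass(frozen=True)
class Percept:
    """Hypothetical immutable snapshot, playing the role of a percept."""

    name: str
    position: tuple


def extract_percept(obj):
    # Copy the values on the main thread; no reference to obj is kept.
    return Percept(name=obj.name, position=tuple(obj.position))


def distance_to_origin(percept):
    # Runs on a worker thread and only touches the immutable snapshot.
    x, y, z = percept.position
    return (x * x + y * y + z * z) ** 0.5


obstacle = SceneObject("obstacle", [3.0, 4.0, 0.0])
snapshot = extract_percept(obstacle)

with ThreadPoolExecutor(max_workers=1) as pool:
    future = pool.submit(distance_to_origin, snapshot)
    obstacle.position[0] = 100.0  # later scene changes do not affect the snapshot
    result = future.result()
```

Because the snapshot holds copied values instead of a reference, the worker never reads engine state while the main thread is changing it.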

A percept is a data container that provides the information a behaviour needs to work properly. Most behaviours in our system execute their algorithms at least once for each percept they receive. A percept can be created and handed over to a behaviour by two different methods, which are described in detail on the environment manual page.

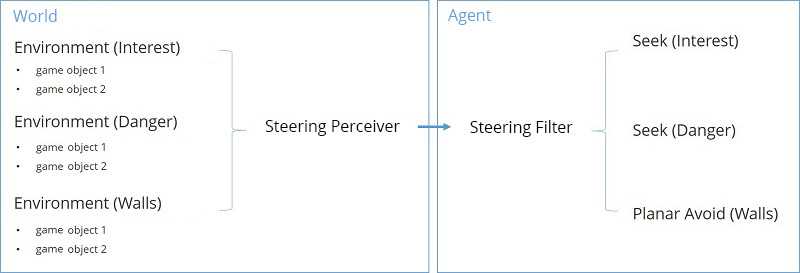

The perception pipeline works as follows and is illustrated in Figure 1.

- AIMEnvironment component, whereby an environment is identified by an individual label.
- PerceptBehaviour can make use of the percept data.

Figure 1: The perception pipeline.

Besides the data that is extracted for all game objects by default (e.g., the position and the collider data), you can specify additional data to be extracted and packed into the corresponding percept by attaching a Steering Tag to an object. To find out which default data the perception pipeline receives, see the API reference page of the SteeringPercept class.

The whole process is done automatically in a way that optimizes the performance of your game or application: the data of a percept is extracted only when it is in range of at least one agent processing it. The range that determines when percepts are relevant is defined in an agent's Steering Filter. Moreover, a Steering Perceiver can utilize optimized spatial structures, which further improve the access time to relevant percepts.
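
The range rule described above can be illustrated with a minimal sketch. It is not how the Steering Filter or Steering Perceiver are implemented; `Agent`, `perception_range`, `in_range_of_any_agent` and `extract_relevant` are hypothetical names, and the linear scan stands in for the optimized spatial structures mentioned above.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Agent:
    position: tuple
    perception_range: float  # hypothetical stand-in for the filter's range


def in_range_of_any_agent(position, agents):
    # An object is relevant if at least one agent is close enough to it.
    return any(
        math.dist(position, agent.position) <= agent.perception_range
        for agent in agents
    )


def extract_relevant(object_positions, agents):
    # Only objects that pass the range check would have their data extracted.
    return [p for p in object_positions if in_range_of_any_agent(p, agents)]


agents = [Agent((0.0, 0.0), 5.0), Agent((20.0, 0.0), 2.0)]
objects = [(3.0, 3.0), (10.0, 0.0), (21.0, 1.0)]
relevant = extract_relevant(objects, agents)
```

Here the object at `(10.0, 0.0)` is outside the range of both agents, so it is skipped and no data would be extracted for it.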

So far, we have talked only about percepts in general. For our system, we decided to use only the SteeringPercept class, but it is possible to create an entirely different percept type by implementing the IPercept interface. However, if you do that, you also need to implement your own perceivers and filters.

So, before you re-implement all pipeline components, consider the following possibilities for injecting your custom data, such as health points, into the system so that behaviours can make use of it.

- Use the Values array of the Steering Tag.
- Derive from SteeringPerceiver, SteeringFilter and SteeringPercept for both back-end and front-end classes. Then, you just need to call the base methods in the overridden methods of your custom classes and add your additional code.
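
Both options can be sketched in a language-neutral way. The snippet below is an assumption-laden illustration, not our API: `SteeringTagSketch`, `PerceptSketch`, `HealthPerceptSketch` and the `receive` method are hypothetical, and storing the health points in slot 0 of the values is merely a convention chosen for this example.

```python
from dataclasses import dataclass, field


@dataclass
class SteeringTagSketch:
    """Hypothetical stand-in for a tag that carries extra values."""

    values: list = field(default_factory=list)


class PerceptSketch:
    """Hypothetical base percept with default data only."""

    def __init__(self):
        self.position = (0.0, 0.0, 0.0)
        self.values = []

    def receive(self, position, tag):
        # Default extraction: the position plus a copy of the tag's values.
        self.position = position
        self.values = list(tag.values) if tag is not None else []


class HealthPerceptSketch(PerceptSketch):
    """Second option: extend the percept and call the base method."""

    def __init__(self):
        super().__init__()
        self.health = 0.0

    def receive(self, position, tag, health=0.0):
        super().receive(position, tag)  # keep the default behaviour
        self.health = health            # then add the custom data


# Option 1: pass health points through the tag's values (slot 0 by convention).
tag = SteeringTagSketch(values=[75.0])
basic = PerceptSketch()
basic.receive((1.0, 2.0, 3.0), tag)
health_from_values = basic.values[0]

# Option 2: a derived percept with a dedicated field.
extended = HealthPerceptSketch()
extended.receive((1.0, 2.0, 3.0), tag, health=75.0)
```

The first option needs no new classes but relies on an agreed meaning for each slot; the second gives the data a named field at the cost of deriving the matching pipeline classes.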