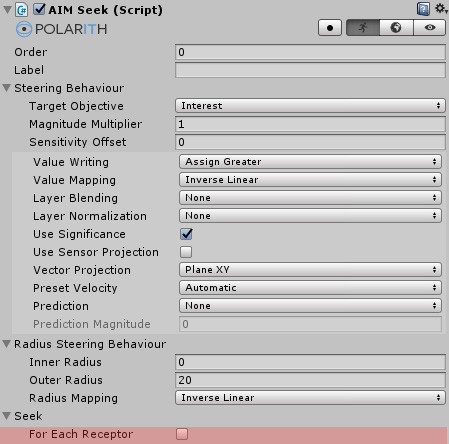

By default, a behaviour is called only once per percept, so the distance and direction between the agent and a percept are calculated from the agent's center. Enabling For Each Receptor bases these calculations on each receptor instead. This matters because a receptor can have a position offset, in which case it is not located at the agent's center (pivot). Some of our built-in behaviours already support this feature, for example, Seek. Note that enabling For Each Receptor is more expensive: the distance and direction calculations must be performed for every receptor, so the overall cost grows with the sensor's receptor count.
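The difference between the two modes can be illustrated with a minimal Python sketch. It is not the library's actual implementation: `Receptor`, `distance_and_direction`, and `evaluate` are hypothetical names chosen for this example, the setting is 2D, and the agent's rotation is ignored so that receptor offsets are simply added to the agent's position.

```python
import math
from dataclasses import dataclass


@dataclass
class Receptor:
    # Hypothetical type: offset of the receptor from the agent's pivot.
    offset: tuple


def distance_and_direction(origin, target):
    """Return the distance and the normalized direction from origin to target."""
    dx, dy = target[0] - origin[0], target[1] - origin[1]
    dist = math.hypot(dx, dy)
    direction = (dx / dist, dy / dist) if dist > 0 else (0.0, 0.0)
    return dist, direction


def evaluate(agent_pos, receptors, percepts, for_each_receptor):
    """Collect one result per percept, or one per (percept, receptor) pair."""
    results = []
    for percept in percepts:
        if for_each_receptor:
            # One calculation per receptor, measured from the receptor's position.
            for receptor in receptors:
                origin = (agent_pos[0] + receptor.offset[0],
                          agent_pos[1] + receptor.offset[1])
                results.append(distance_and_direction(origin, percept))
        else:
            # Default: a single calculation measured from the agent's center.
            results.append(distance_and_direction(agent_pos, percept))
    return results
```

With two receptors and one percept, the default mode yields a single result, whereas the per-receptor mode yields two. This is where the additional cost comes from: the number of calculations is the percept count multiplied by the receptor count.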

Executing steering behaviours for each receptor is crucial if your sensor model relies on receptors that have a position offset, as is the case, for example, with the line sensor shape.

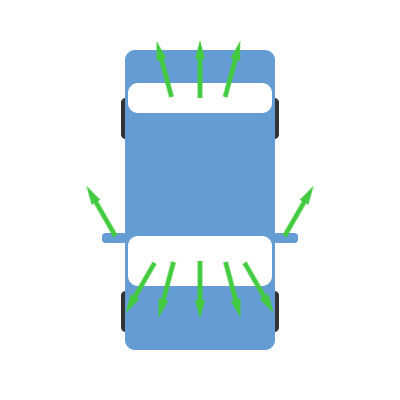

With For Each Receptor, you are not limited to a circle-shaped sensor; you can also construct a scenario like the one shown in Figure 1. Imagine you have a car-like agent. Instead of a car that perceives everything in every direction with the same quality and quantity, we can model a more realistic sensor for this use case. In reality, a driver cannot observe all directions from the driver's seat. To see something behind the car, the driver has to rely on the limited view of the mirrors. An agent can be modelled to do the same by creating a sensor as shown in Figure 1. Most of the receptors, and thus the highest precision, would be at the front. Some receptors would be positioned at the rear, with just one receptor for each side mirror. Assuming that you have an appropriate character controller to handle this agent, the attached behaviours would need to have For Each Receptor enabled to work properly.

Figure 1: A sketch of a car with a possible receptor configuration depicted by green arrows.
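As a rough illustration of such a layout, the following Python sketch builds a list of receptors in the car's local frame, each as an offset plus a viewing direction. Everything in it is an assumption made for the example: the function name `car_receptors`, the car dimensions, the receptor counts, the fan angles, and the choice to let the mirror receptors look straight backward. It does not reflect how sensors are actually authored in the library.

```python
import math


def car_receptors(length=4.0, width=1.8, front_count=7, rear_count=3):
    """Return (offset, direction) pairs in the car's local frame (+y is forward).

    All default values are illustrative; both counts must be at least 2.
    """
    receptors = []
    # Front: many receptors fanned over 120 degrees for higher precision.
    for i in range(front_count):
        angle = math.radians(-60 + 120 * i / (front_count - 1))
        receptors.append(((0.0, length / 2),
                          (math.sin(angle), math.cos(angle))))
    # Rear: a few receptors fanned over 60 degrees around the backward axis.
    for i in range(rear_count):
        angle = math.radians(150 + 60 * i / (rear_count - 1))
        receptors.append(((0.0, -length / 2),
                          (math.sin(angle), math.cos(angle))))
    # Side mirrors: one receptor per side, assumed to look straight backward.
    for side in (-1.0, 1.0):
        receptors.append(((side * width / 2, length / 4), (0.0, -1.0)))
    return receptors
```

Because none of these receptors sits at the pivot, a behaviour that measures distance and direction only from the agent's center would ignore the offsets entirely, which is why For Each Receptor is needed for this kind of sensor.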