The parameter LayerNormalization defines the normalization method applied to an individual behaviour when its objective values are blended into the context. Together with the Layer Blending mechanism, Layer Normalization gives you finer control when combining different behaviours. The following three methods are available.

| Layer Normalization Type | Description |
|---|---|
| None | Objective values remain unchanged. No normalization method is used. |
| Intermediate | The results of the behaviour are normalized separately and are then applied to the actual objective values of the context. Note that Layer Blending needs to be used, otherwise, this option would have no effect at all. |
| Everything | The results of the behaviour are applied as they are, then every context value of the target objective is normalized. Any behaviour processed after this behaviour is not affected. |

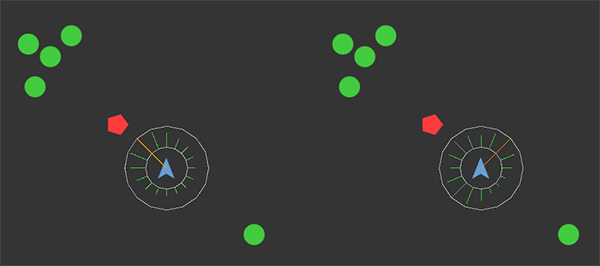

Figure 1: Both agents have a Seek behaviour (on the green circles) and an Avoid behaviour (on the red polygon). Since the Seek behaviour uses Addition for writing values, the magnitude generated by the Avoid behaviour is not enough to maneuver the agent on the left around the obstacle. The agent on the right, however, uses an Intermediate Layer Normalization. Thus, the Avoid behaviour generates comparable magnitudes and the agent avoids the obstacle properly. This allows for an easier combination and parameterization of multiple behaviours.

The normalization function used throughout Polarith AI does not strictly map everything onto the range [0, 1]. It only ensures that all values lie within this range. For example, the values {0.2, 0.7, 0.3} would not be changed by the normalization, whereas {0.2, 2, 0.3} would be normalized to {0.1, 1, 0.15}. Negative values are considered in this process as well.
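The behaviour described above can be illustrated with a minimal Python sketch. It is not Polarith AI's actual implementation: the function name `soft_normalize` is hypothetical, and handling negative values through their absolute magnitude is an assumption, since the text only states that negative values are considered.

```python
def soft_normalize(values):
    """Scale values down only if the largest magnitude exceeds 1.

    Assumption: negative values are handled via their absolute value,
    so the result lies in [-1, 1] and keeps its sign.
    """
    peak = max((abs(v) for v in values), default=0.0)
    if peak <= 1.0:
        # Already within range: keep the values (and their meaning) as they are.
        return list(values)
    # Otherwise divide by the largest magnitude so the peak becomes 1.
    return [v / peak for v in values]


unchanged = soft_normalize([0.2, 0.7, 0.3])  # stays as it is
scaled = soft_normalize([0.2, 2, 0.3])       # scaled down by the peak value 2
```

Under these assumptions, the first call returns its input unchanged, while the second divides every value by 2, which matches the two examples given in the text.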

This has practical reasons: if we strictly normalized everything to the range [0, 1], the magnitude values would lose information and meaning.

Normalization of single behaviours can be useful when it comes to combining multiple behaviours into one objective. A good example can be seen in Figure 1. Without Layer Normalization, it would be impossible to use the Avoid behaviour in this scenario because the absolute value written by the Seek behaviour depends on the actual number of interest objects that are close to the agent. So the Seek values might lie between 0 and 4, whereas the Avoid behaviour generates magnitudes that are always between 0 and 1. Without Layer Normalization, the Avoid magnitudes may therefore not be significant enough compared to the Seek magnitudes.
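To make the difference between the three methods concrete, here is a rough Python sketch. It rests on several assumptions: the names `apply_layer` and `soft_normalize` are hypothetical, behaviours are assumed to write their results additively, the sample values are made up, and Layer Blending itself is not modelled.

```python
def soft_normalize(values):
    # Assumed normalization: scale down only if the largest magnitude exceeds 1.
    peak = max((abs(v) for v in values), default=0.0)
    return list(values) if peak <= 1.0 else [v / peak for v in values]


def apply_layer(context, results, mode):
    """Write one behaviour's results into the context (additive writing assumed)."""
    if mode == "Intermediate":
        # Normalize the behaviour's own results before they are applied.
        results = soft_normalize(results)
    combined = [c + r for c, r in zip(context, results)]
    if mode == "Everything":
        # Apply the results as they are, then normalize all context values.
        combined = soft_normalize(combined)
    return combined


# Illustrative Seek results that added up to values between 0 and 4.
seek = [4.0, 1.0, 0.0]
empty_context = [0.0, 0.0, 0.0]

seek_raw = apply_layer(empty_context, seek, "None")
seek_scaled = apply_layer(empty_context, seek, "Intermediate")
```

In this sketch, `seek_raw` keeps its peak of 4, which would dominate Avoid magnitudes between 0 and 1, while `seek_scaled` peaks at 1 and is therefore comparable to them.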